16 Oct 2023


Always Fresh: Snowflake and DeltaStream Integrate

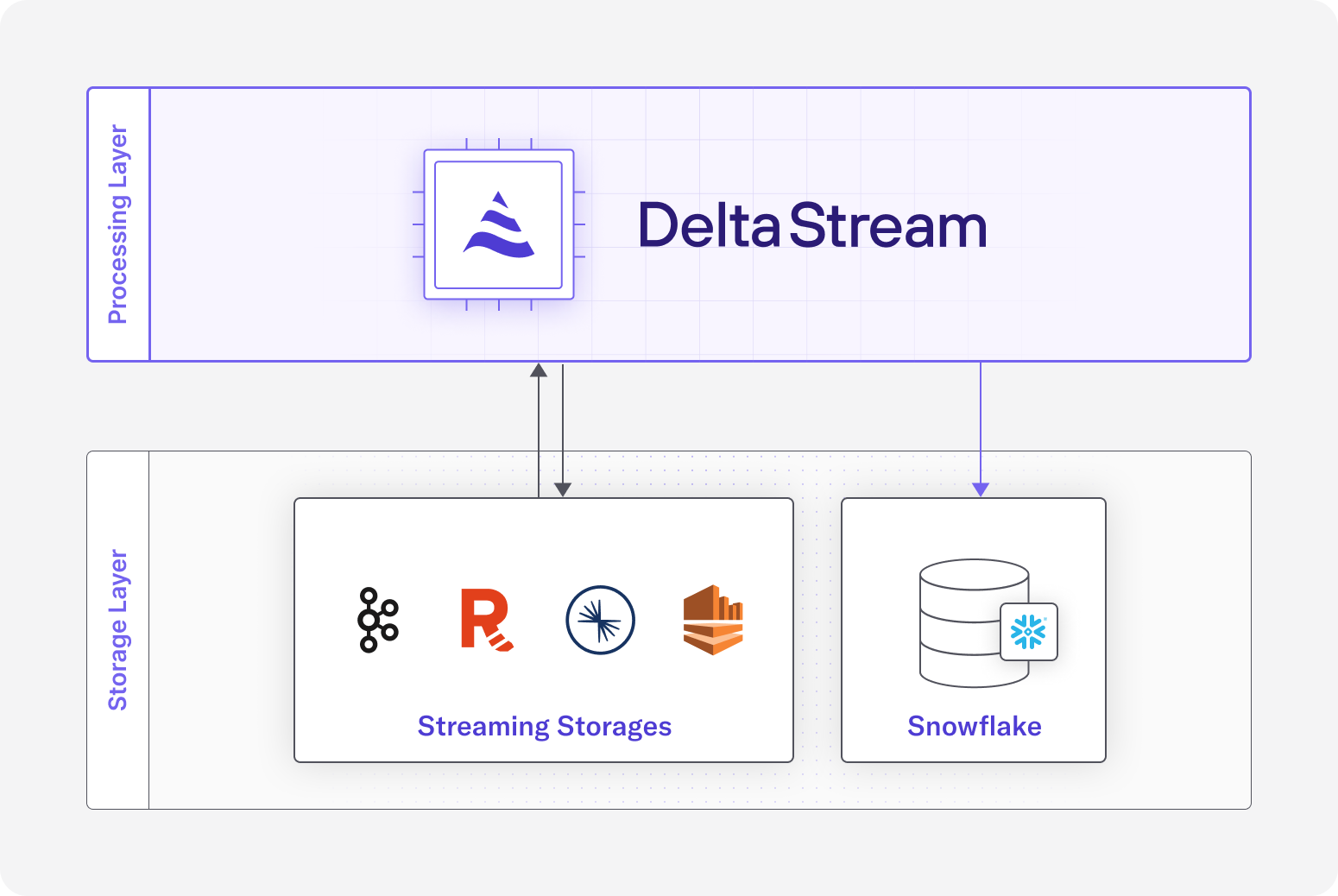

Snowflake is a popular data warehouse destination for streaming data. It lets users process large amounts of data for a variety of purposes, such as dashboards, machine learning, and applications. However, these use cases are only as current as the data behind them, so the data in Snowflake has to be kept fresh. DeltaStream’s Snowflake integration makes it easy to keep your Snowflake data fresh with a simple SQL query.

How can I use Snowflake with DeltaStream?

Our new Snowflake integration writes pre-processed data directly into Snowflake, without staging it in intermediate storage.

With DeltaStream queries, you can transform data from one or more sources into the shape that best suits your Snowflake tables. The query then uses your Snowflake Store to continuously write the results into the target Snowflake table.
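A minimal Python sketch of that flow, under stated assumptions: each source record is reshaped into the columns a target table expects and handed straight to a sink in small batches, with no staging file in between. Transaction, to_row, InMemorySink, and run_pipeline are hypothetical names invented for this illustration; the in-memory sink only stands in for a Snowflake table, and none of this is DeltaStream’s actual API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Transaction:
    # Hypothetical source record shape (not taken from the original post).
    tx_id: int
    cc_type: str
    amount_cents: int


class InMemorySink:
    """Hypothetical stand-in for a target table: transformed rows are
    appended directly, with no intermediate file or staging area."""

    def __init__(self) -> None:
        self.rows: list[dict] = []
        self.batch_sizes: list[int] = []  # records how rows were flushed

    def write_batch(self, batch: list[dict]) -> None:
        self.rows.extend(batch)
        self.batch_sizes.append(len(batch))


def to_row(tx: Transaction) -> dict:
    # Reshape the source record into the columns the target table expects.
    # Uppercase column names mirror how Snowflake stores unquoted identifiers.
    return {
        "TX_ID": tx.tx_id,
        "CC_TYPE": tx.cc_type.upper(),
        "AMOUNT": tx.amount_cents / 100,
    }


def run_pipeline(source: Iterable[Transaction], sink: InMemorySink,
                 batch_size: int = 2) -> None:
    batch: list[dict] = []
    for tx in source:
        batch.append(to_row(tx))
        if len(batch) == batch_size:
            sink.write_batch(batch)  # flush transformed rows straight to the sink
            batch = []
    if batch:
        sink.write_batch(batch)  # flush any remaining rows
```

For example, running three transactions through run_pipeline with batch_size=2 leaves three rows in the sink, written as one batch of two followed by one batch of one. A real streaming job never "ends", so the final flush here only stands in for periodic flushing.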

DeltaStream’s new Snowflake Store uses the Snowpipe Streaming API to write rows into your Snowflake account with low latency and without data loss. We’ll cover this in more detail in an upcoming blog post.

How DeltaStream integrates with Snowflake

Creating a Snowflake Store

In DeltaStream, a Snowflake Store provides connectivity to a specific Snowflake account. To create a new Snowflake Store, use the SNOWFLAKE store type:

As with any other Store in DeltaStream, you can use properties.file to move additional store settings into a separate properties file. See Store Parameters for more information.

Once the Snowflake Store is created, you can perform CRUD (create, read, update, delete) operations on databases, schemas, and tables in the specified Snowflake account without leaving DeltaStream.

For example, you can list available Snowflake databases:

Or operate on the Snowflake schemas and tables:

You can also describe Snowflake entities to get more details about them:

Materializing Streaming Data with Snowflake

Once your Snowflake Store has been created, you can write queries that apply any necessary computation to an upstream stream of data and continuously write the results to a corresponding Snowflake table.

Let’s take a look at a query where we count the number of transactions per type of credit card:

In this example, we’re using a CREATE TABLE AS SELECT (CTAS) query to tell DeltaStream to create a table named CC_TYPE_USAGE in Snowflake, under the "DELTA_STREAMING"."PUBLIC" namespace (that is, the PUBLIC schema of the DELTA_STREAMING database). Once the CTAS query is created, we can describe the new Snowflake table:
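Snowflake addresses a table with a three-part database.schema.table name, and double-quoted identifiers are matched case-sensitively, with an embedded double quote escaped by doubling it. The small Python sketch below shows how the fully qualified name of the CC_TYPE_USAGE table is put together. The helper names quote_identifier and qualified_name are hypothetical; this illustrates Snowflake’s naming convention and is not part of DeltaStream’s API.

```python
def quote_identifier(name: str) -> str:
    # A double-quoted Snowflake identifier keeps its exact case; any embedded
    # double quote must be escaped by doubling it.
    return '"' + name.replace('"', '""') + '"'


def qualified_name(database: str, schema: str, table: str) -> str:
    # Snowflake objects are addressed as database.schema.object.
    return ".".join(quote_identifier(part) for part in (database, schema, table))


print(qualified_name("DELTA_STREAMING", "PUBLIC", "CC_TYPE_USAGE"))
# "DELTA_STREAMING"."PUBLIC"."CC_TYPE_USAGE"
```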

Creating this CTAS query starts a process that continuously counts how many of our product transactions use each credit card type. Each time the count for a given cc_type changes, the query appends a new row to the CC_TYPE_USAGE table. The most recent row for each type holds that type’s current count, which can then be used for whatever analysis the business requires.
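The append-then-read-latest pattern can be illustrated with a short Python sketch. It assumes the upstream transactions are append-only (never updated or deleted) and that every transaction produces a new output row; DeltaStream’s actual emission behavior may differ, and running_counts and latest_counts are hypothetical names. Under that assumption each type’s count only grows, so the last row for a type is also the row with the largest count. That is why the reduction below takes a per-type maximum and needs no ordering column. On the Snowflake side, the same idea corresponds to grouping CC_TYPE_USAGE by its credit card type column and taking the maximum of its count column. The actual column names depend on the query.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def running_counts(cc_types: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Emit a new (cc_type, count) row each time a transaction arrives,
    mimicking an append-only result table."""
    counts: dict[str, int] = defaultdict(int)
    for cc_type in cc_types:
        counts[cc_type] += 1
        yield cc_type, counts[cc_type]


def latest_counts(rows: Iterable[tuple[str, int]]) -> dict[str, int]:
    """Reduce the appended rows to the current count per type.

    With append-only input the count per type never decreases, so the
    maximum count seen for a type is also its most recent count."""
    latest: dict[str, int] = {}
    for cc_type, count in rows:
        latest[cc_type] = max(count, latest.get(cc_type, 0))
    return latest


rows = list(running_counts(["visa", "amex", "visa", "mastercard", "visa"]))
# rows == [('visa', 1), ('amex', 1), ('visa', 2), ('mastercard', 1), ('visa', 3)]
print(latest_counts(rows))
# {'visa': 3, 'amex': 1, 'mastercard': 1}
```

If the upstream data could be updated or retracted, the count for a type could go down, and "last" would no longer equal "max". In that case the table would need an explicit ordering column to pick the latest row.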

The result set can be previewed in the CLI:

Modern Streaming and Analytics

At DeltaStream, we believe in providing a modern, unified stream processing solution that unlocks the full potential of your data. That leads to improved operational efficiency, stronger data security, and, ultimately, a competitive advantage for every organization building products. With the new Snowflake integration, we hope to open yet another door to unifying data across your organization.

If you have any questions or want to try out this integration, reach out to us or request a free trial!