In today’s data-driven world, organizations are seeking effective and reliable ways to extract insights from an ever-increasing volume and velocity of data, so that they can make timely decisions. ETL (Extract, Transform, Load) is a process in which data is extracted from various sources, transformed to fit specific requirements through steps such as cleaning, formatting, and aggregating, and loaded into a target system or data warehouse. ETL ensures data consistency, quality, and usability, and enables organizations to analyze their data effectively. Traditional batch processing, while effective for certain use cases, falls short of the demands of real-time and event-driven data processing and analysis. This is where streaming ETL emerges as a powerful solution.

Streaming ETL: An Overview

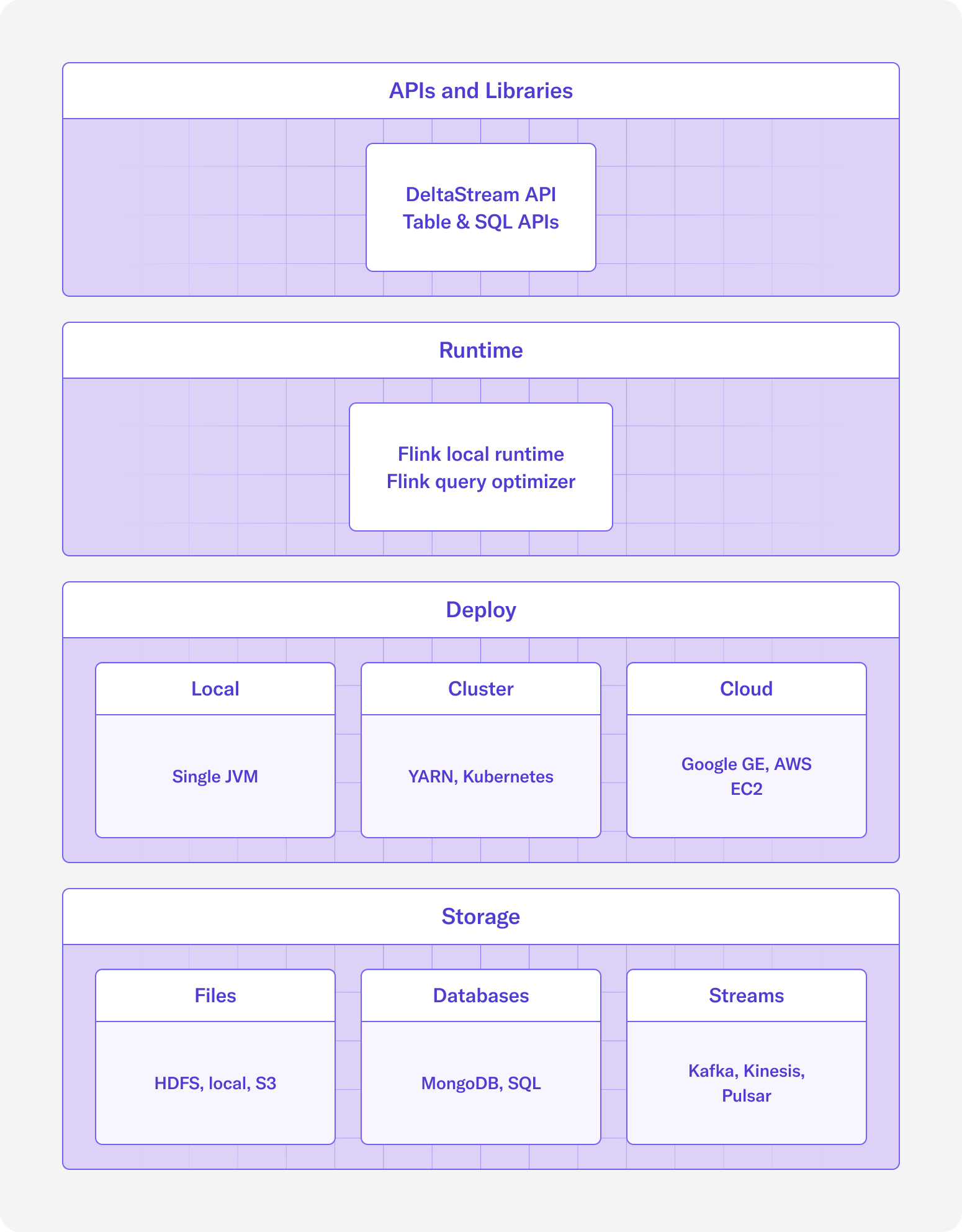

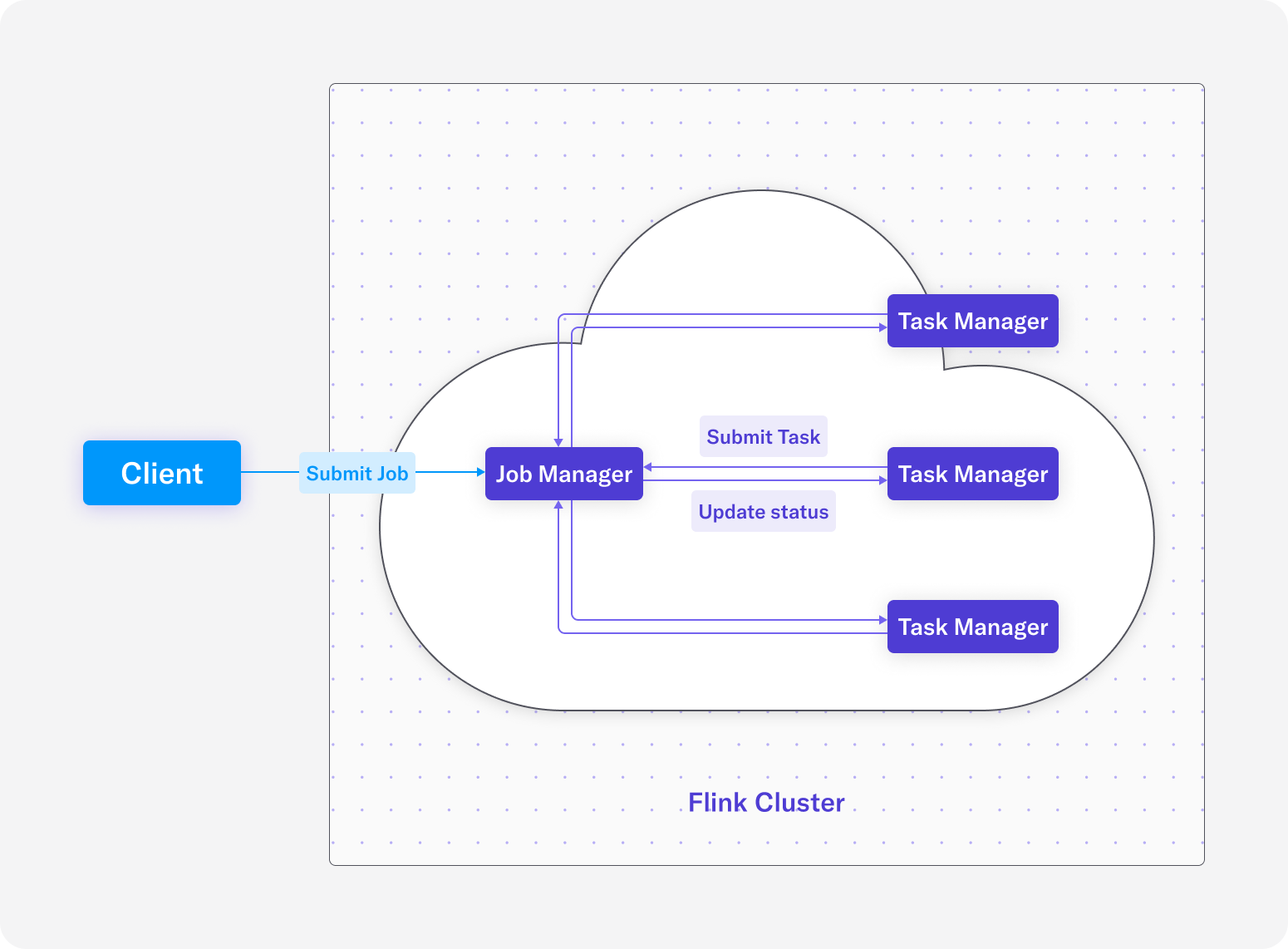

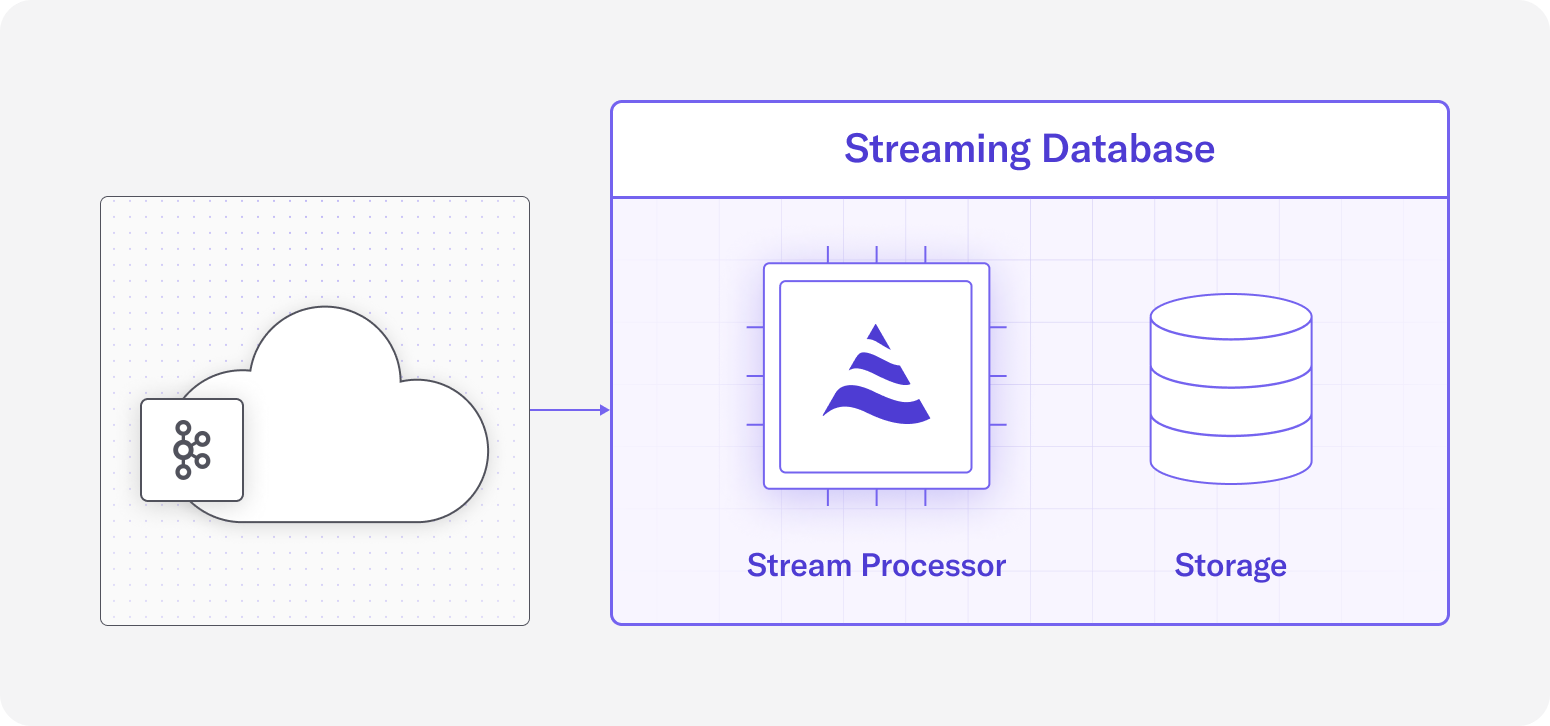

Unlike traditional batch processing, which operates on fixed intervals, streaming ETL operates on a continuous stream of data as records arrive, allowing for real-time analysis. A streaming ETL pipeline begins with the continuous data ingestion phase, in which records are collected from sources ranging from databases to event streaming platforms such as Apache Kafka and Amazon Kinesis. Once data is ingested, it goes through real-time transformation operations such as cleaning, normalization, and enrichment. Stream processing frameworks such as Apache Flink, Kafka Streams, ksqlDB, and Apache Spark provide tools and APIs to apply these transformations and prepare the data. The same frameworks support more advanced real-time operations, such as aggregations and complex machine learning workloads. Finally, the results of the streaming ETL pipeline are delivered to downstream systems and applications for immediate consumption, or they are stored in data warehouses and data stores for future use.
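The stages described above can be sketched in a few lines of plain Python. This is only an illustration, not how any of the frameworks named above is implemented: the record fields (`user`, `amount`), the in-memory list standing in for a broker, and the function names are all assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List


def extract(source: Iterable[dict]) -> Iterator[dict]:
    """Ingest records one at a time (a real pipeline would read from a broker)."""
    for record in source:
        yield record


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Clean and normalize each record as it arrives."""
    for record in records:
        if record.get("amount") is None:  # cleaning: drop incomplete records
            continue
        yield {
            "user": record["user"].strip().lower(),  # normalization
            "amount": float(record["amount"]),
        }


def load(records: Iterable[dict], sink: List[dict]) -> None:
    """Keep a running total per user and emit an updated result per record."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record["user"]] += record["amount"]
        sink.append({"user": record["user"], "total": totals[record["user"]]})


# Hypothetical input events; the second one is incomplete and gets dropped.
events = [
    {"user": " Alice ", "amount": "10.5"},
    {"user": "bob", "amount": None},
    {"user": "alice", "amount": 4.5},
]
results: List[dict] = []
load(transform(extract(events)), results)
print(results)
```

Because each stage is a generator, a record flows through all three stages before the next one is read, which mirrors the record-at-a-time behavior of a streaming pipeline.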

Streaming ETL can be applied in various domains, including fraud detection and prevention, real-time analytics and personalization for targeted advertisements, and IoT data processing and monitoring to handle high velocity and volume of data generated by devices such as sensors and smart appliances.

Why should you use Streaming ETL?

Streaming ETL offers numerous advantages in real-time data processing. Here are the most important ones:

- Streaming ETL provides real-time insights into the emerging trends, anomalies and critical events in the data as they happen. It operates with low latency, and ensures the processing results are up to date. This reduces the gap between the time data arrives and the time it is processed. This facilitates accurate and timely decision-making, and enables organizations to capitalize on time-sensitive opportunities or address emerging issues promptly.

- Streaming ETL frameworks are designed to scale horizontally, which is crucial for handling increased data volumes and processing requirements in real-time applications. This elasticity allows for seamless scaling of resources based on demand, and enables the system to manage spikes in data volume and velocity without sacrificing performance.
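One common way to achieve the horizontal scaling mentioned above is to partition records by key, so that each worker handles a subset of the keys. The sketch below is a simplified illustration under that assumption; the function name, the choice of MD5 as a stable hash, and the key format are hypothetical and do not reflect the partitioner of any specific framework.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition using a hash that is stable across processes."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions


# Route hypothetical device events to four workers.
device_events = [("sensor-1", 20.5), ("sensor-2", 18.0), ("sensor-1", 21.0)]
assignments: Dict[int, List[tuple]] = defaultdict(list)
for device_id, reading in device_events:
    assignments[partition_for(device_id, 4)].append((device_id, reading))
print(dict(assignments))
```

All events for the same key land on the same partition, so per-key state and ordering stay local to one worker. Note that changing the number of partitions changes the mapping, which is one reason rescaling a stateful job needs care.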

With all its advantages, Streaming ETL also presents some challenges:

- A streaming ETL process typically introduces additional complexity to data processing. Real-time data ingestion, continuous transformations, and persisting results while maintaining performance and data consistency require careful design and implementation.

- Streaming ETL pipelines run in a distributed streaming environment, which introduces new challenges for data processing. Because transformations are applied in parallel and asynchronously, there is a risk of delay or data loss during the ingestion and delivery stages unless an appropriate delivery guarantee, such as exactly-once or at-least-once, is used. Ordering events and maintaining data consistency are complex in such situations and, if not handled properly, may affect the accuracy of computations that rely on event order. Fault-tolerance mechanisms such as replication, checkpointing, and backup strategies are essential to prevent data loss and ensure the reliability and correctness of results.
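To make the delivery-guarantee point more concrete: with at-least-once delivery, a record may arrive more than once after a retry, so the consumer has to tolerate duplicates. The following is a minimal sketch of one way to do that, assuming every event carries a unique `id`. The class name, the event fields, and the in-memory set of seen ids are illustrative assumptions; a production system would persist this state.

```python
from typing import Dict, Set


class IdempotentSink:
    """Applies each event at most once, keyed by a unique event id."""

    def __init__(self) -> None:
        self.seen_ids: Set[str] = set()
        self.balances: Dict[str, int] = {}

    def write(self, event: dict) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event["id"] in self.seen_ids:
            return False  # redelivered event: ignore it
        self.seen_ids.add(event["id"])
        account = event["account"]
        self.balances[account] = self.balances.get(account, 0) + event["delta"]
        return True


# The repeated "e1" simulates a retry under at-least-once delivery.
deliveries = [
    {"id": "e1", "account": "a", "delta": 5},
    {"id": "e2", "account": "a", "delta": 3},
    {"id": "e1", "account": "a", "delta": 5},
]
dedup_sink = IdempotentSink()
applied = [dedup_sink.write(event) for event in deliveries]
print(applied, dedup_sink.balances)
```

Combining at-least-once delivery with an idempotent write is one common way to get results that look exactly-once from the outside, without claiming that this is how any particular platform implements the guarantee.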

Using a modern stream processing platform, such as DeltaStream, can help address the above challenges and enable organizations to benefit from all the advantages of Streaming ETL.

Differences between Streaming ETL and Batch ETL

Data processing model: Batch ETL starts by collecting large volumes of data over a period of time and processes these batches at fixed intervals, applying transformations to the entire dataset at once. Streaming ETL operates on data as it arrives, continuously processing and transforming it as individual records or small chunks.
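The difference between the two models can be illustrated with a simple average. This is a toy sketch with made-up readings and hypothetical function names: the batch version needs the whole dataset before it produces a single answer, while the streaming version refines its answer after every record.

```python
from typing import Iterable, Iterator, List


def batch_average(readings: List[float]) -> float:
    """Batch style: wait for the full dataset, then compute once."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Streaming style: update the result after every record."""
    count = 0
    total = 0.0
    for value in readings:
        count += 1
        total += value
        yield total / count


readings = [20.0, 22.0, 27.0]
batch_result = batch_average(readings)
stream_results = list(streaming_average(readings))
print(batch_result)    # 23.0
print(stream_results)  # [20.0, 21.0, 23.0]
```

Both approaches converge on the same final value; the streaming version simply makes intermediate results available along the way and only keeps a count and a running total in memory.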

Latency: Batch ETL introduces inherent latency because data is processed in intervals. This latency normally ranges from minutes to hours. Streaming ETL processes data in real time and offers low latency. Results are available shortly after the data arrives and are updated continuously.

Data volume and velocity: Batch ETL is well-suited for processing large volumes of data, collected over time. Therefore it is effective when dealing with historical data. Streaming ETL, on the other hand, is designed for high-velocity data streams and is effective for use cases that require immediate processing.

Processing frameworks: Batch ETL typically uses frameworks such as Apache Hadoop and Apache Spark, along with traditional ETL and data warehouse tools. These frameworks are optimized for processing large volumes of data in parallel, but not necessarily for real-time use cases. Streaming ETL leverages specialized stream processing frameworks such as Apache Flink and Kafka Streams, which are optimized for processing continuous streams of data in real time. Some of these frameworks, such as Apache Flink, can now process batch workloads too. As these efforts and improvements continue, the overlap between the frameworks that handle these two kinds of workloads is expected to grow.

Fault tolerance: Batch ETL typically processes large volumes of data in fixed intervals. If a failure occurs, all the data within that batch may be affected, which can leave partially written results. This makes failure recovery challenging in Batch ETL, as it involves cleaning up partial results and reprocessing the entire batch. Removing partial results and state and starting a new run is complicated and normally involves manual intervention. Reprocessing a batch is also time-consuming and resource-intensive; if it takes too long, the pipeline falls behind and processing of the next batch is delayed. Moreover, rerunning some tasks could have unexpected side effects that affect the correctness of the final results. Such issues need to be handled properly during a job restart.

Streaming ETL does not rely on many jobs that run one after another over time. Instead, a single long-running job maintains its state and computes results incrementally as data arrives. Therefore, Streaming ETL is generally better equipped to handle failures and partial results. Because results are generated incrementally, a failure does not force discarding already generated results and reprocessing sources from the beginning. Stream processing frameworks provide transaction-like processing, exactly-once semantics, and write-ahead logs to ensure atomicity and data consistency. They have built-in mechanisms for fault recovery, handle out-of-order events, and ensure end-to-end reliability by leveraging distributed messaging systems.
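The idea of resuming from saved state rather than starting over can be sketched as follows. This is a deliberately simplified model, assuming a replayable source (a Python list indexed by offset) and a checkpoint stored in a dictionary. The function name, the `fail_at` parameter used to simulate a crash, and the checkpoint layout are all hypothetical; real frameworks coordinate checkpoints across many parallel tasks.

```python
from typing import Dict, List, Optional


def run_job(log: List[int], checkpoint: Dict[str, int], every: int = 2,
            fail_at: Optional[int] = None) -> Dict[str, int]:
    """Sum a replayable log, saving (offset, total) every `every` records."""
    offset, total = checkpoint["offset"], checkpoint["total"]
    while offset < len(log):
        if fail_at is not None and offset == fail_at:
            raise RuntimeError("simulated crash")
        total += log[offset]
        offset += 1
        if offset % every == 0:  # periodic checkpoint of position and state
            checkpoint["offset"], checkpoint["total"] = offset, total
    checkpoint["offset"], checkpoint["total"] = offset, total
    return checkpoint


log = [1, 2, 3, 4, 5]
checkpoint = {"offset": 0, "total": 0}
try:
    run_job(log, checkpoint, fail_at=3)  # crashes before the fourth record
except RuntimeError:
    pass
saved = dict(checkpoint)                 # state that survived the crash
run_job(log, checkpoint)                 # restart resumes from the checkpoint
print(saved, checkpoint)
```

After the simulated crash, only the one record processed since the last checkpoint is replayed, and the final total matches a run without failures. The offset and the state are saved together, which is what keeps the replayed record from being counted twice.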

Choosing Between Streaming ETL and Batch ETL

There are several factors to consider when deciding between Streaming ETL and Batch ETL for a data processing use case. The most important factor is the latency requirement: consider how quickly insights and actions are needed. If a real-time response is critical, then Streaming ETL is the correct choice. Another important factor is data volume and velocity, along with the cost of processing. You should evaluate the volume of data and the rate at which it arrives. Streaming ETL can process fast-arriving data immediately. However, due to its inherent complexity and higher resource demand, it is more difficult to maintain.

Streaming ETL also introduces challenges related to maintaining the correct order of events, especially in distributed environments. Batch ETL processes data in a much more deterministic and mostly sequential manner, which ensures data consistency and ordered processing. A modern stream processing platform is a viable solution to handle these challenges and difficulties when picking Streaming ETL as a solution. Finally, you need to consider how often the data sources evolve over time in your use case as that can impact the structure of incoming records. Data processing pipelines need to handle schema evolution properly to prevent disruptions and errors. Managing schema changes, versioning, and implementing schema inference mechanisms become crucial to ensure correctness and reliability. Using a stream processing framework enables you to address these changes in a streaming ETL pipeline, which is intended to run continuously with no interruption.
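As a small illustration of handling schema evolution in a long-running pipeline, the sketch below upgrades older records to the current schema version before they are processed. Everything specific here is an assumption made for the example: the `schema_version` field, the two versions, and the hypothetical change that splits `name` into `first_name` and `last_name`. In practice, a schema registry or the serialization format would usually take on part of this work.

```python
from typing import Any, Dict

CURRENT_VERSION = 2


def upgrade(record: Dict[str, Any]) -> Dict[str, Any]:
    """Bring a record of any known schema version up to CURRENT_VERSION."""
    version = record.get("schema_version", 1)  # unversioned records count as v1
    upgraded = dict(record)                    # never mutate the input record
    if version == 1:
        # Hypothetical v2 change: 'name' was split into first and last name.
        first, _, last = upgraded.pop("name").partition(" ")
        upgraded.update(first_name=first, last_name=last)
        version = 2
    if version != CURRENT_VERSION:
        raise ValueError(f"unsupported schema version: {version}")
    upgraded["schema_version"] = CURRENT_VERSION
    return upgraded


old_record = {"name": "Ada Lovelace"}
new_record = {"first_name": "Alan", "last_name": "Turing", "schema_version": 2}
print(upgrade(old_record))
print(upgrade(new_record))
```

Normalizing records at the edge of the pipeline lets the downstream transformations target a single schema, and rejecting unknown versions explicitly makes an unexpected upstream change visible instead of silently corrupting results.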

Conclusion

Choosing between streaming ETL and batch ETL requires a thorough understanding of the specific requirements and trade-offs of each. While both approaches have their strengths and weaknesses, they are effective in different use cases and for different data processing needs. Streaming ETL offers real-time processing with low latency and high scalability to handle high-velocity data. On the other hand, batch ETL is well-suited for historical analysis, scheduled reporting, and scenarios where near-real-time results are not critical. In this blog post, we covered the specifics as well as the pros and cons of each approach, and explained the important factors to consider when deciding which one to choose for a given data processing use case.

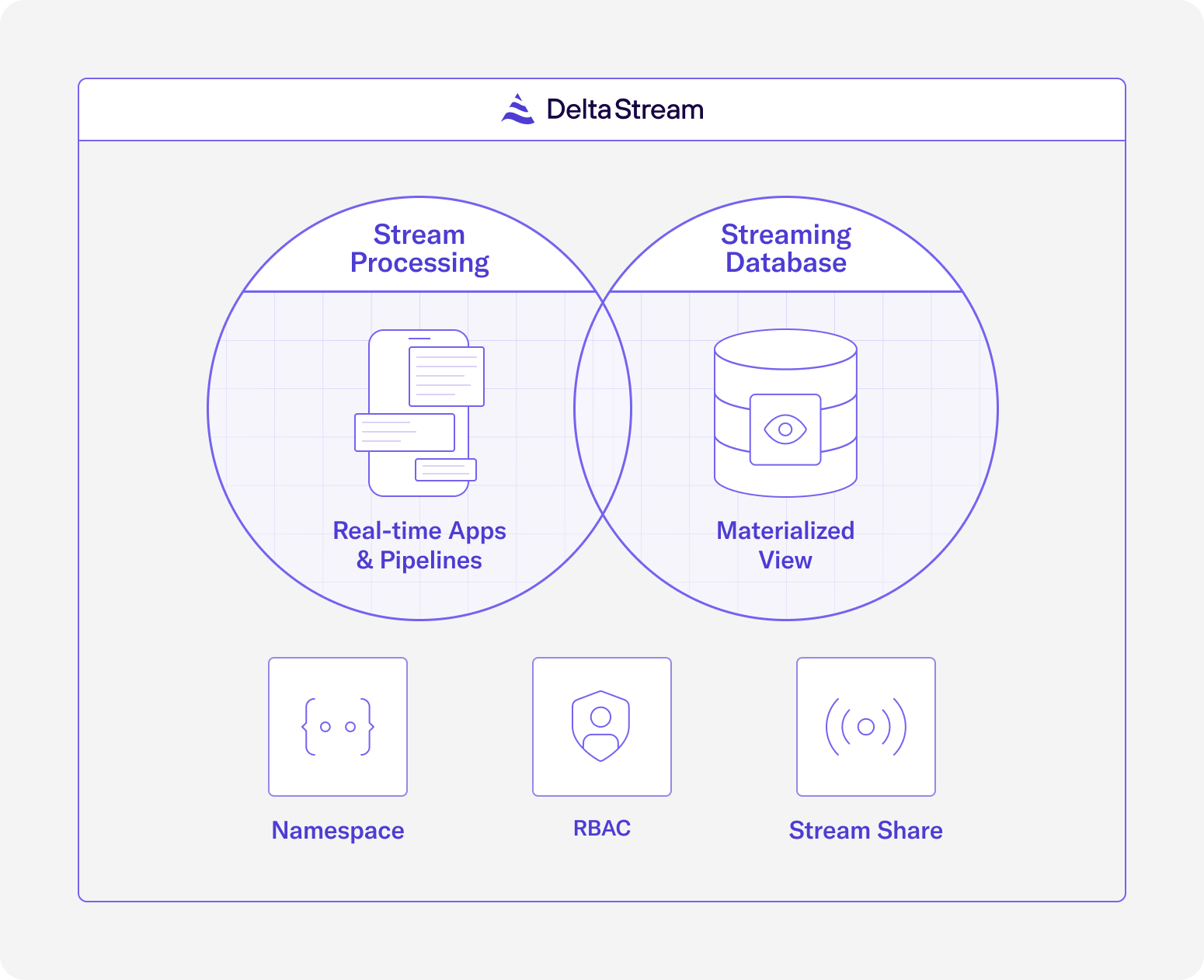

DeltaStream provides a comprehensive stream processing platform to manage, secure, and process all your event streams. You can use it to create your streaming ETL solutions. It is easy to operate and scales automatically. You can find more in-depth information about DeltaStream features and use cases in our blog series. If you are ready to try a modern stream processing solution, you can reach out to our team to schedule a demo and start using the system.