02 Apr 2025

Min Read

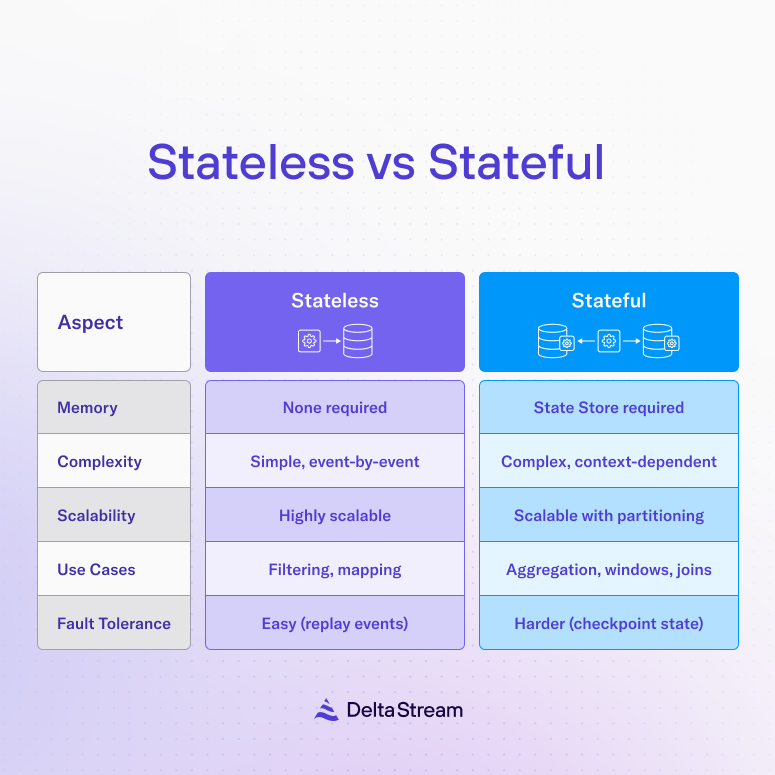

A Guide to Stateless vs. Stateful Stream Processing

Stream processing has become a cornerstone of modern data architectures, enabling real-time analytics, event-driven applications, and continuous data pipelines. From tracking user activity on websites to monitoring IoT devices or processing financial transactions, stream processing systems allow organizations to handle data as it arrives. However, not all stream processing is created equal. Two fundamental paradigms—stateless and stateful stream processing—offer distinct approaches to managing data streams, each with unique strengths, trade-offs, and use cases. This blog’ll explore the technical differences, dive into their implementations, provide examples, and touch on when each approach is most applicable.

What is Stream Processing?

First, a little background. Stream processing differs from traditional batch processing by operating on continuous, unbounded flows of data—think logs, sensor readings, or social media updates arriving in real time. Frameworks like Apache Kafka Streams, Apache Flink, and Spark Streaming provide the infrastructure to efficiently ingest, transform, and analyze these streams. The key distinction between stateless and stateful processing lies in how these systems manage information across events.

Stateless Stream Processing: Simplicity in Motion

Stateless stream processing treats each event as an isolated entity, processing it without retaining memory of prior events. When an event arrives, the system applies predefined logic based solely on its content and then moves on.

How Stateless Works:

- Input A stream of events, e.g.,

{user_id: 123, action: "click", timestamp: 2025-03-13T10:00:00}. - Processing Apply a transformation or filter, such as "if action == 'click', increment a counter" or "convert timestamp to local time."

- Output Emit the result (e.g., a metric or enriched event) without referencing historical data.

Technical Characteristics:

- No State Storage Stateless systems don’t maintain persistent storage or in-memory context, reducing resource overhead.

- Scalability Since events are independent, stateless processing scales horizontally easily. You can distribute the workload across nodes without synchronization.

- Fault Tolerance Recovery is straightforward—lost events can often be replayed without affecting correctness, assuming idempotency (i.e., processing the same event twice yields the same result).

Latency Processing is typically low-latency due to minimal overhead.

Example Use Case Consider a real-time clickstream filter that identifies and forwards "purchase" events from an e-commerce site. Each event is evaluated independently: if the action is "purchase," it’s sent downstream; otherwise, it’s discarded. No historical context is needed.

Implementation Example (Kafka Streams):

Trade-Offs: Stateless processing is lightweight and simple but limited. It can’t handle use cases requiring aggregation, pattern detection, or temporal relationships—like counting clicks per user over an hour—because it lacks memory of past events.

Stateful Stream Processing: Memory and Context

Stateful stream processing, in contrast, maintains state across events, enabling complex computations that depend on historical data. The system tracks information—like running totals, user sessions, or windowed aggregates—in memory or persistent storage.

How Stateful Works:

- Input The same stream, e.g.,

{user_id: 123, action: "click", timestamp: 2025-03-13T10:00:00}. - Processing Update a state store, e.g., "increment user 123’s click count in a 1-hour window."

- Output Emit results based on the updated state, e.g., "user 123 has 5 clicks this hour."

Technical Characteristics:

- State Management requires mechanisms to track state, such as key-value stores (e.g., RocksDB in Flink) or in-memory caches.

- Scalability More complex due to state partitioning and consistency requirements. Keys (e.g., user_id) are often used to shard state across nodes.

- Fault Tolerance State must be checkpointed or replicated to recover from failures, adding overhead but ensuring correctness.

- Latency Higher than stateless due to state access and updates, though optimizations like caching mitigate this.

Example Use Case: A fraud detection system that flags users with more than 10 transactions in a 5-minute window. This requires tracking per-user transaction counts over time—a stateful operation.

Implementation Example (Flink):

Trade-Offs: Stateful processing is powerful but resource-intensive. Managing state increases memory and storage demands, and fault tolerance requires sophisticated checkpointing or logging (e.g., Kafka’s changelog topics). It’s also prone to issues like state bloat (e.g., expiring old windows) if not properly managed.

When to Use Stateless vs. Stateful?

- Stateless: Opt for stateless processing when your application involves simple transformations, filtering, or enrichment without historical context. It’s ideal for lightweight, high-throughput pipelines where speed and simplicity matter.

- Stateful: Choose stateful processing for analytics requiring memory—aggregations (e.g., running averages), sessionization, or pattern matching. It’s essential when the "why" behind an event depends on "what came before."

Wrap-up

Stateless and stateful stream processing serve complementary roles in the streaming ecosystem. Stateless processing offers simplicity, scalability, and low latency for independent event handling, making it a go-to for straightforward transformations. In contrast, more complex and resource-heavy, stateful processing provides more advanced capabilities like time-based aggregations and contextual analysis, which are critical for real-time insights. Choosing between them depends on your use case: stateless for speed and simplicity, stateful for depth and memory. Modern frameworks often support both, allowing hybrid pipelines where stateless filters feed into stateful aggregators. Understanding their mechanics empowers you to design efficient, purpose-built streaming systems.